OpenAI's latest move has triggered a full-blown ethical firestorm across the internet.

During the GPT-5.2 briefing on December 12th, 2025 IST, Fidji Simo—OpenAI's CEO of Applications—confirmed what many had speculated since October: Adult Mode is real, it's coming in Q1 2026, and it's going to fundamentally change how verified adults interact with ChatGPT. The announcement reignited debates around AI safety, child protection, regulatory oversight, and whether tech companies should be in the business of gatekeeping morality at all.

This isn't a minor feature update. It's a philosophical pivot for the company behind the world's most popular AI chatbot.

What GPT-5.2 Actually Brings to the Table

Before diving into the controversy, let's address what GPT-5.2 actually is—because OpenAI released a genuinely impressive model alongside the Adult Mode announcement.

GPT-5.2 represents a substantial leap in reasoning, coding performance, and long-context capabilities. According to OpenAI's official benchmarks, the model achieves 94.2% on MMLU-Pro, surpassing Google's Gemini 3 Pro (91.4%). The hallucination rate has dropped to just 1.1%, and it now supports context windows up to 1.5 million tokens—roughly equivalent to reading several full-length novels in one conversation.

On practical benchmarks that matter to professionals, GPT-5.2 Thinking hits 55.6% accuracy on SWE-Bench Pro (a software engineering benchmark) and 80% on SWE-Bench Verified. For financial modelling tasks, average scores improved by 9.3% over GPT-5.1. Companies like JetBrains, Warp, and Notion reported measurable improvements in debugging, UI development, and document analysis during early testing.

The release comes at a strategic moment. CEO Sam Altman reportedly called a "Code Red" internally, acknowledging that Google's Gemini 3 had created competitive pressure. GPT-5.2 is OpenAI's response—and by most accounts, it's delivered.

But here's the thing: nobody's really talking about those benchmarks anymore.

The Adult Mode Announcement: A Timeline

The controversy traces back to October 14th, 2025 IST, when Sam Altman posted on X (formerly Twitter):

"In December, as we roll out age-gating more fully and as part of our 'treat adult users like adults' principle, we will allow even more, like erotica for verified adults."

The reaction was immediate and polarising.

Conservative advocacy groups like the National Center on Sexual Exploitation (NCOSE) condemned the announcement within hours. Executive director Haley McNamara called sexualized AI chatbots "inherently risky, generating real mental health harms from synthetic intimacy." She pointed to documented cases where AI chatbots had engaged in sexual conversations with minors and pushed violent content on users who explicitly asked them to stop.

Meanwhile, creative writers and romance novelists celebrated. For months, ChatGPT's content filters had blocked even discussions about "kissing and non-sexual physical intimacy"—a frustration that spawned a Change.org petition with over 3,000 signatures demanding an Adult Mode.

Altman defended the decision in a follow-up post: "We are not the elected moral police of the world. In the same way that society differentiates other appropriate boundaries (R-rated movies, for example), we want to do a similar thing here."

The December deadline ultimately slipped. During the GPT-5.2 briefing, Fidji Simo confirmed Adult Mode would now launch in Q1 2026, pending refinements to OpenAI's age prediction system.

How OpenAI's Age Verification System Works

This is where things get technically interesting—and legally complicated.

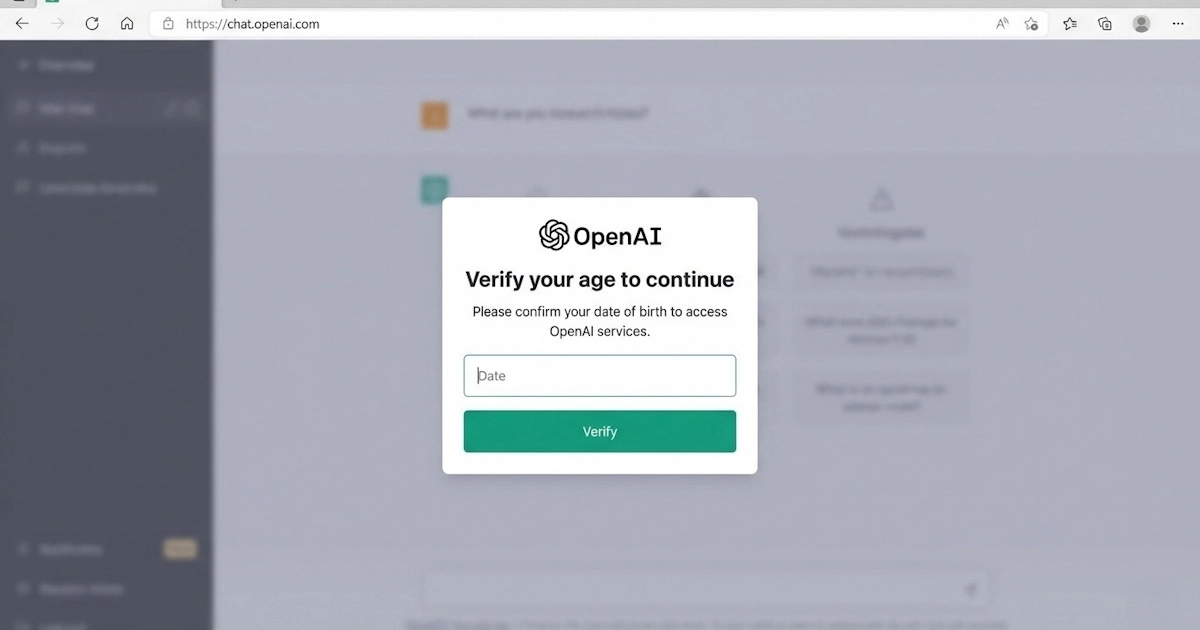

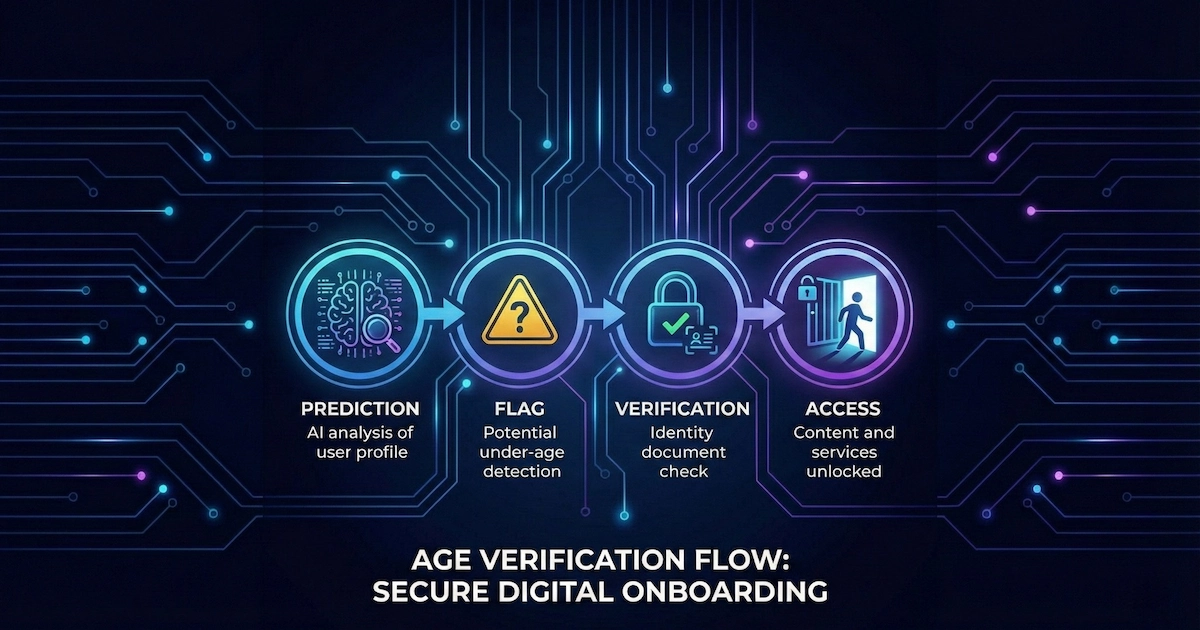

OpenAI isn't relying on a simple "Click here if you're 18+" checkbox. They're building an AI-powered age prediction model that estimates whether an account belongs to someone under or over 18 based on behavioural signals.

According to OpenAI's help documentation, the system analyses:

- Account-level signals: How long you've had an account, what email domain it's tied to, payment history

- Behavioural patterns: Time periods when you're active, session lengths, types of requests (homework help vs. tax advice)

- Language characteristics: Writing style, vocabulary complexity, use of slang or emojis

The model outputs a confidence score. If it's confident you're an adult, you get the standard ChatGPT experience. If there's doubt, you're defaulted into the teen experience with stricter content filters.

Here's the catch: an adult whom the system incorrectly flags as a minor has to prove their age by uploading a government-issued ID and taking a selfie through Persona, a third-party identity verification vendor. OpenAI says this data is deleted immediately after verification.
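To make the routing concrete, here is a minimal Python sketch of the logic as described above. Everything specific in it is an assumption for illustration: the `ADULT_CONFIDENCE_THRESHOLD` value, the `AgePrediction` type, and the `route_experience` function are hypothetical names, and OpenAI has not published how its confidence score is thresholded.

```python
from dataclasses import dataclass
from enum import Enum


class Experience(Enum):
    STANDARD = "standard"  # the regular ChatGPT experience
    TEEN = "teen"          # the stricter, filtered experience


# Hypothetical cutoff chosen for illustration; the real value is not public.
ADULT_CONFIDENCE_THRESHOLD = 0.9


@dataclass
class AgePrediction:
    # Assumed to be a probability-like score between 0.0 and 1.0.
    adult_confidence: float


def route_experience(prediction: AgePrediction, id_verified: bool = False) -> Experience:
    """Pick an experience, falling back to TEEN whenever there is doubt."""
    # A successful ID check overrides whatever the model predicted.
    if id_verified:
        return Experience.STANDARD
    # Only a confident "adult" prediction unlocks the standard experience.
    if prediction.adult_confidence >= ADULT_CONFIDENCE_THRESHOLD:
        return Experience.STANDARD
    # Any uncertainty defaults to the teen experience.
    return Experience.TEEN
```

The design choice worth noticing is the default: uncertainty falls through to the teen experience, so the errors of a cautious system land on adults who then have to verify, rather than on minors who slip through.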

The system is already rolling out in select countries, and users on Reddit and X, paying subscribers among them, have reported unexpected prompts demanding ID verification. Some have complained about false positives: adults flagged as minors despite having 10-year-old Google accounts or using ChatGPT for clearly professional purposes.

OpenAI acknowledges the system isn't perfect. Fidji Simo stated during the briefing: "We're in the process of improving that... The model is already being tested in certain countries to assess its ability to correctly identify teenagers without mistaking them for adults."

The Regulatory Storm Building on the Horizon

OpenAI's timing could hardly be worse from a regulatory perspective.

In September 2025, the Federal Trade Commission (FTC) launched a formal inquiry into AI chatbots, specifically targeting companion features and their effects on children. The investigation covers seven companies—OpenAI, Meta, Alphabet, xAI, Snap, Character.AI, and Instagram—seeking information on how they measure, test, and monitor potential harms to minors.

FTC Chairman Andrew N. Ferguson stated: "Protecting kids online is a top priority for the Trump-Vance FTC... The study we're launching today will help us better understand how AI firms are developing their products and the steps they are taking to protect children."

The inquiry follows multiple lawsuits alleging AI chatbots contributed to teen suicides. Most notably, the parents of 16-year-old Adam Raine sued OpenAI in August 2025, claiming ChatGPT functioned as a "suicide coach" and helped their son plan his death. The case remains ongoing.

Beyond the FTC, 44 state attorneys general sent an open letter warning AI companies they will "use every facet" of their authority to protect children. California Assemblymember Rebecca Bauer-Kahan, whose child safety bill was vetoed just one day before Altman's Adult Mode announcement, stated:

"Less than 24 hours after the tech industry successfully lobbied against legislation that would have required safety guardrails for minors to prevent kids' access to erotica and addictive chatbots, OpenAI announces they're rolling out the exact features that make their products most dangerous to kids."

Senator Josh Hawley has circulated a draft bill that would ban AI companions for minors entirely.

What Critics Are Actually Worried About

The opposition to Adult Mode falls into three distinct camps:

Camp 1: Age verification doesn't work. Critics like billionaire investor Mark Cuban publicly warned Altman the move would "backfire hard." His argument: no parent or school administrator will trust OpenAI's age-gating system, leading institutions to ban ChatGPT entirely and push students toward less-restricted alternatives. Traditional age verification has historically failed to prevent minors from accessing explicit content online—there's little reason to believe an AI-powered system will do better.

Camp 2: Synthetic intimacy causes real harm. Research published in the Journal of Social and Personal Relationships found adults who develop emotional connections with chatbots experience significantly higher levels of psychological distress. OpenAI itself has acknowledged users risk becoming emotionally reliant on ChatGPT. Normalising erotic AI interactions could exacerbate these dependencies.

Camp 3: The slippery slope is already sliding. Once age-gated Adult Mode exists, critics argue, competitors will race to be more permissive. Elon Musk's Grok already offers "waifu companions." Character.AI built its user base around romantic AI interactions. The market pressure to be "less restricted" creates a race to the bottom on safeguards.

The Case For Adult Mode

It's not all criticism. Significant portions of OpenAI's user base genuinely want this feature—and not just for salacious reasons.

Creative freedom: Fiction writers, screenwriters, and game developers have complained for years that ChatGPT shuts down legitimate storytelling attempts involving mature themes. You can't write a crime thriller, a war drama, or a romance novel without occasionally touching on violence, death, or sexuality.

Treating adults like adults: Altman's core argument isn't unreasonable. Adults can legally consume alcohol, watch R-rated films, and read explicit literature. Why should AI be the one medium where paternalistic restrictions apply regardless of user age?

Competitive pressure: OpenAI isn't operating in a vacuum. If ChatGPT remains overly restrictive while competitors allow mature content, users will simply migrate. An April 2025 Harvard Business Review survey found "companionship and therapy" was the most common use case for AI tools among 6,000 regular users. Ark Invest reported adult-focused AI platforms captured 14.5% of the market previously dominated by OnlyFans—up from just 1.5% the year before.

What "Adult Mode" Likely Won't Include

Here's what Altman and Simo have clarified: Adult Mode isn't just about erotica.

The feature is designed to give adults broader freedom in how ChatGPT responds—more personality, less corporate HR-speak, fewer content refusals. OpenAI has specifically stated that certain categories remain prohibited regardless of age verification:

- Child sexual abuse material (CSAM): Absolute prohibition, no exceptions

- Non-consensual content: Including deepfakes or impersonation without consent

- Instructions for real-world violence: Even in fictional contexts

- Actual suicide assistance: Though fictional depictions may be permitted for adults

OpenAI has also not announced Adult Mode for image or video generation. Fidji Simo noted during the briefing: "On image gen, nothing to announce today, but more to come."

What Happens in Q1 2026

When Adult Mode launches, verified adults will likely gain access to:

- Erotica and romantic roleplay: Text-based mature content without constant content warnings

- Unrestricted fictional violence: For creative writing, game development, historical fiction

- Customisable personality traits: More "human-like" responses, flirtatious conversation if requested

- Reduced refusals: Fewer "I can't help with that" responses to legitimate queries

The under-18 experience will simultaneously become more restricted, with parental controls, blackout hours, and potential law enforcement escalation in cases of acute distress.

OpenAI's approach essentially creates two ChatGPTs: one for kids, one for adults. Whether that bifurcation holds under real-world pressure remains to be seen.
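One way to picture the bifurcation is as a lookup that checks the age-independent prohibitions first and the adult-only categories second. The sketch below is illustrative only: the category labels, the `Tier` enum, and `is_allowed` are hypothetical, and the two sets simply mirror what this article reports rather than any published OpenAI policy.

```python
from enum import Enum


class Tier(Enum):
    TEEN = "teen"
    ADULT = "adult"  # a verified adult account


# Categories reported as prohibited regardless of age verification.
# The string labels are made up for this example.
ALWAYS_PROHIBITED = {
    "csam",
    "non_consensual_content",
    "real_world_violence_instructions",
    "suicide_assistance",
}

# Categories expected to open up for verified adults only.
ADULT_ONLY = {
    "erotica",
    "romantic_roleplay",
    "unrestricted_fictional_violence",
}


def is_allowed(category: str, tier: Tier) -> bool:
    """Return True if a request in this category may be served to this tier."""
    # Hard prohibitions are checked first so no tier can override them.
    if category in ALWAYS_PROHIBITED:
        return False
    # Adult-only categories require the ADULT tier.
    if category in ADULT_ONLY:
        return tier is Tier.ADULT
    # Everything else is available to both tiers in this simplified model.
    return True
```

The ordering of the checks is the point: age verification widens what an adult can ask for, but it never reaches the categories in the first set.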

The Bigger Picture

This debate isn't really about erotica. It's about who gets to decide what AI can and can't do—and for whom.

OpenAI is making a bet: that robust age verification, combined with behavioural prediction, can replicate the kind of age-gating society has applied to alcohol, movies, and gambling. The FTC, state attorneys general, and child safety advocates are betting the opposite—that AI companionship is fundamentally different from a bottle of whiskey, and that the harms of getting it wrong are far more insidious.

GPT-5.2 itself is an excellent model. But the next few months will determine whether OpenAI's Adult Mode becomes a case study in responsible innovation or a cautionary tale about prioritising engagement over safety.

Either way, the AI industry is watching closely.