Quick Answer: Stack Overflow's monthly questions crashed 78% in 2025, returning to 2008 launch levels. Indian developers (56% AI trust rate—highest globally) are leading the exodus to ChatGPT and Copilot. The catch? AI was trained on Stack Overflow's data, and now there's no new human knowledge being created.

The Sacred Ritual Is Over

For nearly two decades, every developer on the planet followed the same sacred ritual.

You hit a wall. You copy the error message. You paste it into Google. You click the first Stack Overflow link. You scroll past the question, find the green checkmark, copy the code, and pray it works.

That ritual is dead.

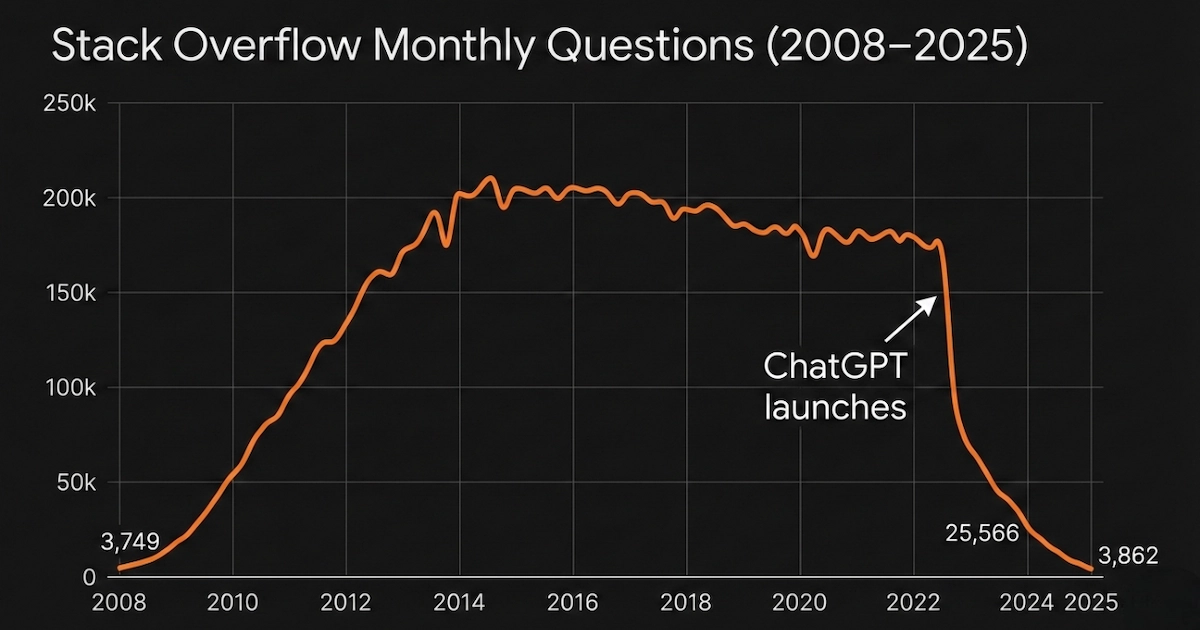

December 2025 saw Stack Overflow record just 3,862 new questions. To put that number in perspective, the platform received roughly the same volume in August 2008, the month it opened in private beta ahead of its public launch that September. At its peak around 2014, Stack Overflow was fielding over 200,000 questions monthly.

Seventeen years of growth. Erased in 24 months.

The Numbers Don't Lie (They Scream)

Let's talk cold, hard data.

Between April 2024 and April 2025, Stack Overflow posts fell by 64%. Daily active users dropped 47%. Traffic plummeted from approximately 110 million monthly visits in 2022 to around 65 million by late 2025, a drop of roughly 41%, and that number keeps falling.

From March 2023 to December 2024, monthly questions crashed from 87,105 to 25,566. That's a 70.6% collapse in under two years.
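For readers who want to check the percentages in this section, here is a minimal Python sketch. The inputs are the figures quoted above; the helper name `pct_decline` is ours, not anything from Stack Overflow. The traffic numbers are approximate, and the 2014 peak is quoted as "over 200,000," so that last result should be read as a lower bound on the drop.

```python
def pct_decline(start: float, end: float) -> float:
    """Percentage drop from start to end, measured relative to start."""
    if start <= 0:
        raise ValueError("start must be positive")
    return (start - end) / start * 100


# Figures as quoted in this article (not independently sourced here)
monthly_questions = pct_decline(87_105, 25_566)  # March 2023 -> December 2024
monthly_visits = pct_decline(110e6, 65e6)        # 2022 -> late 2025, approximate
peak_to_latest = pct_decline(200_000, 3_862)     # ~2014 peak -> December 2025

print(f"Monthly questions: {monthly_questions:.1f}% decline")
print(f"Monthly visits:    {monthly_visits:.1f}% decline")
print(f"Peak to Dec 2025:  {peak_to_latest:.1f}% decline (at least)")
```

At one decimal place, the question count works out to a 70.6% decline, monthly visits to about 40.9%, and the peak-to-December comparison to at least 98.1%.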

Here's the poetic irony: Prosus, a Netherlands-based investment firm, acquired Stack Overflow in June 2021 for $1.8 billion. The founders—Jeff Atwood and Joel Spolsky—sold with near-perfect timing, just before terminal decline set in. Within 18 months of that acquisition, ChatGPT launched.

Someone in that boardroom is still having nightmares.

Why ChatGPT Won (It's Not Just Speed)

The obvious answer is AI. ChatGPT launched in November 2022, and the graphs show an immediate cliff.

But here's what most analyses miss: Stack Overflow was already bleeding out before AI arrived.

Questions started declining around 2014. What happened then? Stack Overflow "improved moderator efficiency." Translation: they got really good at closing questions, marking duplicates, and downvoting anything that didn't fit their rigid standards.

The result? A platform that developers respected but never loved.

Ask any Indian developer about their Stack Overflow experience, and you'll hear the same stories. You post a genuine question. Within minutes, it's downvoted to oblivion, marked as duplicate (linking to a vaguely related question from 2011), and closed. If you're lucky, someone leaves a snarky comment about how you "didn't even try searching."

One developer on r/webdev put it perfectly: "I get more help on Discord in 5 minutes than I ever did from SO in 5 years."

AI didn't just offer faster answers. It offered judgment-free answers. You can ask ChatGPT the "dumbest" question imaginable, and it will politely explain the concept without making you feel like an idiot. Try that on Stack Overflow, and you'll be lectured about proper question formatting before your question gets closed.

The Ouroboros Effect: AI Ate Its Own Parent

Here's where the story gets philosophically fascinating.

The AI models destroying Stack Overflow were built on Stack Overflow.

ChatGPT, GitHub Copilot, Claude—all of them ingested millions of Stack Overflow questions and answers as training data. The collective wisdom of 17 years of human developers, meticulously curated and upvoted, became the foundation for AI coding assistants.

Then those assistants made Stack Overflow obsolete.

In May 2024, Stack Overflow announced a partnership with OpenAI, allowing access to its OverflowAPI for model training. The community response was... not positive. Many saw it as the platform selling contributor-created content without consent or compensation.

The backlash intensified when Stack Overflow banned users who tried to edit or delete their own answers in protest.

Think about that. You spend years contributing knowledge for free. A company sells that knowledge to train AI. That AI takes your job and renders the platform useless. And when you try to reclaim your own work, you get banned.

This is the digital tragedy of the commons.

India's Role in the Shift

Indian developers aren't just participating in this shift—they're leading it.

According to Stack Overflow's own 2025 Developer Survey, developers in India show the highest level of trust in AI tools globally, with 56% either highly or somewhat trusting them. Compare that to Germany at 22% or the UK at 23%.

India is also among the top three countries responding to Stack Overflow surveys, alongside the US and Germany. With over 5 million developers and one of the fastest-growing tech workforces in the world, Indian sentiment matters.

The 2024 survey found that India had the highest AI favorability rating at 75%, tied with Spain. Indian developers aren't skeptical about AI—they're embracing it wholeheartedly.

And why wouldn't they?

A junior developer in Bengaluru or Hyderabad faces the same hostile Stack Overflow moderators as someone in San Francisco. But the cultural dynamics hit differently. When you're already battling imposter syndrome in a competitive job market, being publicly humiliated and watching your genuine question get downvoted to -15 isn't constructive criticism. It's demoralizing.

ChatGPT doesn't care if your English grammar is imperfect. It doesn't mark your question as "unclear" because you used the wrong technical terminology. It just helps.

The Moderator Strike That Said Everything

In June 2023, Stack Overflow moderators went on strike.

Not because the platform was too strict, but because it had become too lenient on AI-generated content. The company issued a private policy telling moderators to stop removing AI-generated answers in most cases. The public version of this policy differed significantly from the private one.

Over 70% of Stack Overflow's active moderators joined the strike, which lasted until August 2023.

The irony was brutal. Stack Overflow's own moderators—the same people criticized for years as overly harsh gatekeepers—were fighting to maintain content quality against a flood of AI slop. And the company told them to stand down.

The platform that built its reputation on "high-quality, human-curated answers" was now prioritizing engagement metrics over accuracy. The moderators saw the writing on the wall: if AI-generated garbage floods the site, the platform becomes worthless.

They were right.

Where Are Developers Going Instead?

If not Stack Overflow, then where?

AI tools (obviously): 84% of developers now use or plan to use AI in their workflow. ChatGPT commands 82% adoption, GitHub Copilot 68%, and Google Gemini 47%. For Indian developers, these numbers trend even higher.

Discord: Stack Overflow's own 2024 survey revealed that Discord dominates among developers learning to code. Programming servers like Reactiflux have over 200,000 members. The catch? Discord conversations are ephemeral. There's no searchable archive for the next person with the same question.

Reddit: Programming subreddits like r/learnprogramming and r/webdev offer friendlier environments. You can ask "stupid" questions without getting publicly shamed. The community upvotes helpful answers without the toxic reputation-gaming that plagued Stack Overflow.

GitHub Discussions: For project-specific questions, GitHub's built-in discussion forums are increasingly popular.

The fragmentation is real. Knowledge that used to be centralized in one searchable repository is now scattered across private Discord servers, ephemeral Reddit threads, and AI model weights that can't be inspected.

The Hidden Cost Nobody's Talking About

Here's the problem nobody wants to acknowledge: AI models need fresh training data.

If developers stop asking questions on Stack Overflow, there's no new human knowledge for AI to learn from. The models stagnate. They keep serving answers based on 2023 best practices while the actual ecosystem evolves.

Already, experienced developers report that AI assistants struggle with newer frameworks and libraries. Ask ChatGPT about a cutting-edge tool released last month, and you'll get outdated information—or confident hallucinations.

Stack Overflow's decline creates a knowledge vacuum. Discord and Reddit discussions disappear into the void. Blog posts get buried by SEO spam. Documentation is often incomplete or corporate-sanitized.

We're entering an era where tribal knowledge returns. If you don't know someone who knows the answer, you might be stuck. That's a regression to pre-Stack Overflow chaos.

Stack Overflow's Pivot (And Why It's Controversial)

Stack Overflow isn't going down without a fight.

The company pivoted to Stack Overflow for Teams, an enterprise product that captures internal company knowledge. Revenue actually grew to $115 million in the 2025 fiscal year, up 17%. The platform isn't financially dead; it's just socially dead.
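To put the growth figure in context, here is a rough back-of-the-envelope sketch in Python. It assumes "up 17%" means year-over-year growth against the previous fiscal year, which the figures above don't spell out. The helper name `implied_prior_value` is hypothetical, and the result is an estimate, not a reported number.

```python
def implied_prior_value(current: float, growth_pct: float) -> float:
    """Back out the earlier value from a current value and a growth rate.

    Assumes growth_pct is measured relative to the earlier value.
    """
    if growth_pct <= -100:
        raise ValueError("growth_pct must be greater than -100")
    return current / (1 + growth_pct / 100)


# $115 million in fiscal 2025, up 17% (figures as quoted in this article)
prior_revenue = implied_prior_value(115.0, 17.0)
print(f"Implied prior-year revenue: about ${prior_revenue:.1f} million")
```

Under that assumption, the prior year comes out at roughly $98 million, so the enterprise business added something like $17 million in a year while public question volume was collapsing.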

In November 2025, Stack Overflow rebranded Stack Overflow for Teams as "Stack Internal," a product explicitly designed to export enterprise knowledge for AI training. The company confirmed licensing deals with AI labs "similar to Reddit's arrangements" (which generate over $200 million annually).

This means Stack Overflow is now in the business of monetizing knowledge—both the public corpus contributed by volunteers and private enterprise data.

The community implications are significant. Contributors who spent years building the free knowledge commons are watching that work get packaged and sold. No compensation. No opt-out. Just a terms-of-service update nobody read.

What This Means for Indian Developers

For India's developer workforce, this shift has real implications.

The good news: AI tools democratize access to coding help. You don't need to navigate Stack Overflow's toxic culture or worry about having your question closed. You can learn faster, ship faster, and build more.

The bad news: AI is only as good as its training. As Stack Overflow dies, the source of authoritative human knowledge dies with it. Future AI models might be trained on AI-generated content—a snake eating its own tail.

The practical reality: For complex architectural decisions, debugging weird edge cases, or understanding nuanced trade-offs, AI often fails. Many senior developers report that for truly hard problems, they still prefer human expertise—whether that's Stack Overflow archives, Reddit, or colleagues.

The developers who thrive will be those who know when to use AI and when to go deeper. Blindly trusting ChatGPT output is already causing problems in codebases worldwide.

The Verdict

Stack Overflow didn't die from a single wound. It died from a thousand cuts—years of hostile moderation, rigid gatekeeping, and a culture that prioritized "quality" over kindness—followed by the guillotine stroke of generative AI.

The platform's knowledge remains valuable. Those 17 years of human-curated answers aren't disappearing. But the living, breathing community that once powered the site? Gone.

For Indian developers especially, this marks the end of an era. Stack Overflow taught a generation how to code, how to debug, and how to ask better questions (even if it was harsh about it). That education happened at scale, for free, accessible to anyone with an internet connection.

What replaces it won't be free. AI assistants require subscriptions or API costs. Enterprise knowledge platforms require enterprise budgets. The open commons is closing.

Stack Overflow's story is a cautionary tale about what happens when you forget that communities are made of people—and people have options.

We'll update this article as Stack Overflow's Q1 2026 numbers emerge. If the December trend continues, we might be writing an obituary instead.