Samsung & OpenAI: Building the AI Infrastructure of Tomorrow

The Announcement

On October 1, 2025, Samsung (alongside SK Group) and OpenAI signed a Letter of Intent (LOI) to collaborate on AI data center infrastructure. This isn’t just Samsung helping with chips: the partnership spans advanced memory, data centers, shipbuilding, and even floating data center concepts.

In Samsung’s own words, the collaboration will “bring together industry-leading technologies and innovations across advanced semiconductors, data centers, shipbuilding, cloud services and maritime technologies.”

What’s going on under the hood is about more than just volume — it’s about rethinking how future AI infrastructure gets built, deployed, cooled, powered, and scaled.

Why This Matters (Beyond the Hype)

Let’s get real: when two giants like Samsung and OpenAI talk infrastructure, it's not just press releases. Here’s what’s at stake.

1. Memory is the bottleneck

Modern AI models (especially large language models and vision models) are memory-hungry beasts. High-bandwidth memory (HBM) and advanced DRAM are critical for feeding GPUs / accelerators fast enough.
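To see why bandwidth, and not just capacity, is the constraint, here is a rough back-of-the-envelope sketch in Python. It assumes the simplest case, single-stream text generation where every model weight has to be read from memory once per generated token. The parameter count, precision, and bandwidth figures are illustrative placeholders, not specifications of any real accelerator or of OpenAI's systems.

```python
def max_tokens_per_second(params_billion: float,
                          bytes_per_param: float,
                          bandwidth_gb_per_s: float) -> float:
    """Upper bound on decode speed when the accelerator is memory-bound.

    Assumes each generated token requires streaming all weights once,
    and ignores caches, batching, and compute time.
    """
    model_bytes = params_billion * 1e9 * bytes_per_param
    bandwidth_bytes_per_s = bandwidth_gb_per_s * 1e9
    return bandwidth_bytes_per_s / model_bytes


# Hypothetical inputs: 70B parameters, 2 bytes each, 3000 GB/s of memory bandwidth.
rate = max_tokens_per_second(70, 2, 3000)
print(f"{rate:.1f} tokens/s upper bound")
```

Under these assumptions the bound scales linearly with memory bandwidth: double the bandwidth and the ceiling doubles, no matter how much raw compute sits next to the memory. Real deployments batch requests to soften this, but the basic pressure on memory stays.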

Samsung and SK hynix have agreed to supply memory to OpenAI’s “Stargate” initiative, with a target of up to 900,000 DRAM wafer starts per month. That’s more than incremental scaling; at that volume, it means reorienting fab capacity.

By locking in memory supply, OpenAI reduces a key risk in AI buildouts: chip and memory shortages. For Samsung, it cements a lead role in the backbone of global AI.

2. Data centers — many flavors, many challenges

Memory is only the first piece. You still need power, cooling, footprint, networking, security, and modular scalability. The LOI covers collaboration across Samsung’s data center, cloud, and maritime arms.

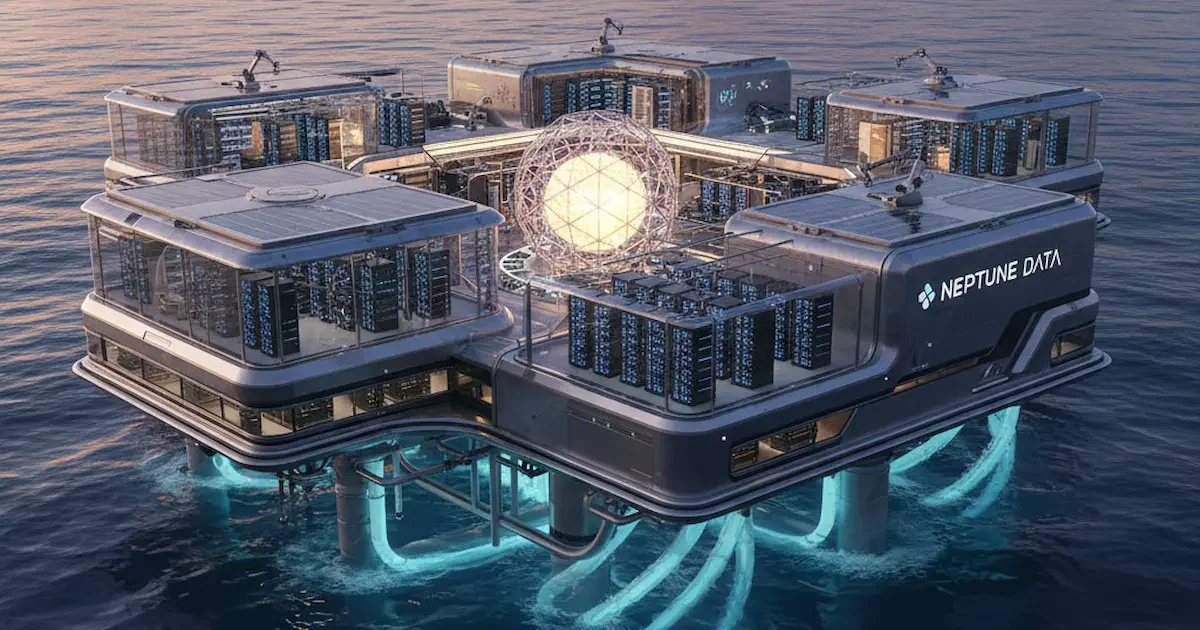

Interestingly, Samsung C&T and Samsung Heavy Industries will explore floating data centers, that is, data centers built offshore or on water platforms. The appeal? Fewer land constraints, potentially better cooling (water is a good heat sink), and maybe some flexibility in siting (e.g. near undersea cables). But it’s extremely challenging: consider reliability, maintenance, power supply, and sea weather.

In parallel, SK Telecom has signed a Memorandum of Understanding (MOU) with OpenAI to build an AI data center in South Jeolla province (southwest Korea). That site could host a “Stargate Korea” node.

3. Strategic value & national ambition

This is a geopolitical and strategic play, too. South Korea wants to cement its role in global AI and semiconductors. By having Samsung serve as a key infrastructure partner, it strengthens both supply chain sovereignty and prestige.

For OpenAI, diversifying hardware partners and locations helps manage political risk, supply risk, and resilience.

Technical & Practical Hurdles

It’s not all clean lines and signed agreements. Here are the subtle (and not so subtle) challenges:

· Floating data centers: The “cool” idea (pun intended) comes with big engineering risks: mooring, wave motion, corrosion, power delivery (connection to the grid), fiber links to land, maintenance, and resilience to storms.

· Energy & cooling: AI data centers devour power. Even if you’re offshore, you still need an energy supply (subsea cables? on-site generation?) and ways to dissipate heat.

· Latency / connectivity: If your data center is offshore or in a remote region, latency to users and the quality of links to backbone networks and peering points become crucial.

· Scalability & modularity: AI demand surges unpredictably. Designing modular, scalable data center nodes is key, but fixed infrastructure (site prep, utilities) limits flexibility.

· Integration across businesses: Samsung is not a monolith when it comes to cloud, construction, and maritime work. Coordinating multiple divisions (SDS for cloud/IT, Heavy Industries for shipbuilding, C&T for construction) will test the organizational fabric.

· Execution timelines and capital: LOIs and MOUs are promises, not guarantees. The real test is whether they can build fast, rent out capacity, maintain uptime, and manage costs.
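The latency point in the list above can be made concrete with a small Python sketch. It estimates only the propagation delay of light in optical fiber, assuming a typical refractive index of about 1.47 and a straight cable run. Real paths add routing detours, switching, and queuing on top, so treat the result as a floor rather than a prediction. The function name and the sample distances are hypothetical.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.47          # assumed typical value for silica fiber


def fiber_round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip time over a fiber run of the given length."""
    speed_in_fiber = SPEED_OF_LIGHT_KM_PER_S / FIBER_REFRACTIVE_INDEX
    one_way_seconds = distance_km / speed_in_fiber
    return 2 * one_way_seconds * 1000  # convert seconds to milliseconds


# Illustrative distances only, not real site locations.
for km in (50, 500, 5000):
    print(f"{km:>5} km: {fiber_round_trip_ms(km):.2f} ms round trip")
```

The rule of thumb that falls out is roughly 1 ms of round-trip time per 100 km of fiber. A platform a few dozen kilometers offshore adds little on its own; the bigger question is how far the traffic travels once it reaches land.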

What This Means for India & Asia

You might wonder: “Cool, but why should I in Hyderabad care?” Let me connect the dots.

· Supply chain ripple: With Samsung further entrenched in AI infrastructure, supply chains for components, memory, packaging, and ancillary equipment may see increased demand. Indian players (in memory, server assembly, cooling tech) could get subcontracting opportunities.

· Regional competition: India, Singapore, Japan, China — all are vying to host AI infrastructure. Korea pushing “Stargate Korea” with Samsung + OpenAI may tilt some flows of investment or attention. India would need to accelerate incentives and policy clarity to compete.

· Learning & standards: The architecture, data center designs, cooling innovations, and floating tech that emerge could become reference blueprints globally — including for Indian data-center builders.

· Service & enterprise tie-ins: Samsung SDS (the IT arm) will also act as a reseller & integrator for OpenAI’s enterprise services in Korea. If this model succeeds, it could be adapted in other markets, potentially including India.

Verdict & What to Watch

Samsung + OpenAI is not just another collaboration: it’s ambitious, broad, and stretches across domains. It’s betting on a future where AI infrastructure isn’t just landlocked server farms, but dynamic, fluid, and globally distributed.

But hype and execution are different beasts. The real test will lie in:

1. Getting memory supply ramped reliably

2. Launching actual floating or novel data centers, not just designs

3. Managing cost, durability, and operational complexities

4. Scaling across geographies without bottlenecks

If they pull it off, they could rewrite how the world builds AI infrastructure.

If they fail, it’ll be a cautionary tale in overreach. Either way, it’s one to watch closely.