Google just fired a broadside at Meta's comfortable lead in the smart glasses market—and simultaneously reminded everyone that the AI race is far from settled. In a packed week of announcements, the company revealed its 2026 roadmap for Android XR glasses, rolled out Gemini 3 Deep Think for advanced reasoning, and dropped a complete overhaul of Google Translate powered by its latest AI models.

This isn't incremental improvement. This is Google saying: "We're not just catching up. We're redefining what these categories mean."

The Smart Glasses Gambit: 2026 Is the Year

At The Android Show: XR Edition held earlier this week, Google finally put dates on its smart glasses ambitions. The company confirmed that consumer-ready Android XR glasses will arrive in 2026 through partnerships with Samsung, Warby Parker, and Gentle Monster—a strategic mix of manufacturing muscle and fashion credibility that Meta's Ray-Ban partnership has proven essential for mainstream adoption.

Here's the kicker: Google isn't building just one type of glasses. It's planning two distinct categories to cover different use cases and price points.

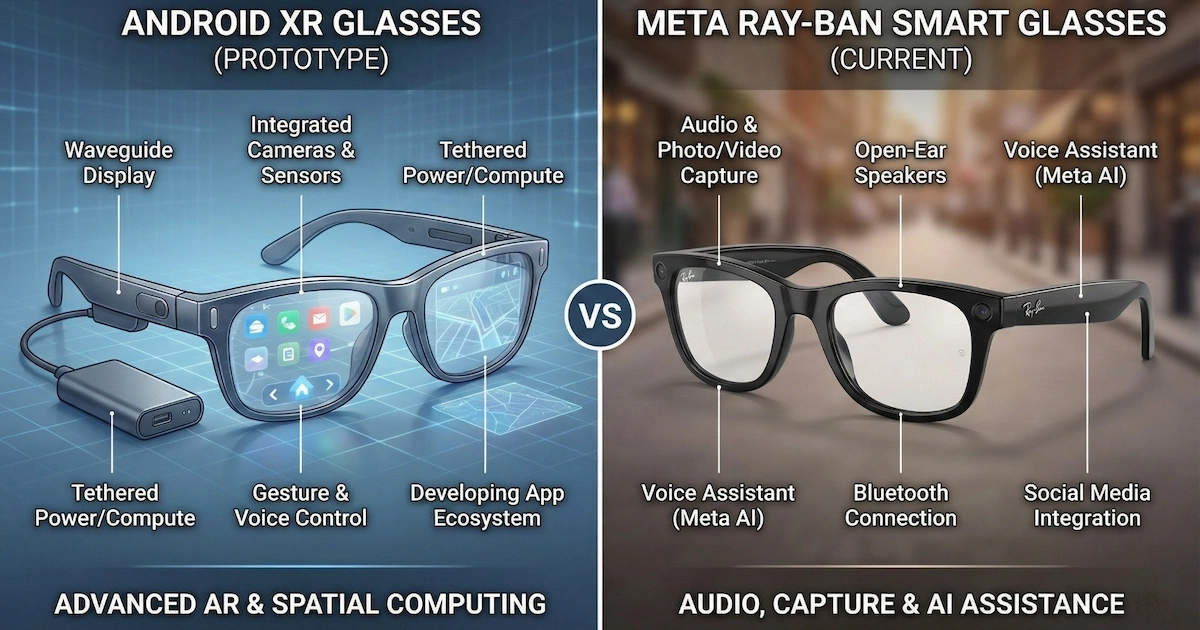

AI Glasses (Screen-free): These will feature built-in cameras, microphones, and speakers—essentially Google's take on the current Ray-Ban Meta formula. You'll be able to chat naturally with Gemini, snap photos, and get contextual help about your surroundings without any visual interface. Think of them as AirPods with eyes.

Display AI Glasses: The more ambitious variant adds an in-lens display for glanceable information—turn-by-turn navigation, live translation captions, message previews, all appearing directly in your line of sight. These glasses will also integrate with Wear OS watches for cross-device functionality, a feature Meta's ecosystem currently lacks.

Google VP Shahram Izadi demonstrated the display prototype at a TED Conference in April 2025, where attendees reportedly couldn't tell he was wearing smart glasses until he pointed them out. He showed live translation from Farsi to English and image recognition by scanning a book—all processed through a paired smartphone to keep the glasses lightweight.

"For over a decade, we've been working on the concept of smart glasses," Google wrote in its official blog post. "With Android XR, we're taking a giant leap forward."

Project Aura: The Hardcore XR Option

For users who want more immersion without a full VR headset, Google also unveiled Project Aura, wired XR glasses developed with XREAL that offer a 70-degree field of view, the largest optical see-through display XREAL has ever delivered.

Unlike the consumer-focused Warby Parker and Gentle Monster designs, Project Aura connects to a tethered "compute puck" running the Qualcomm Snapdragon XR2+ Gen 2 chipset—the same silicon inside Samsung's Galaxy XR headset. The puck handles processing and battery while keeping the glasses relatively lightweight at around 80 grams.

The use case here is clear: portable productivity and entertainment. Google envisions travelers watching movies on virtual screens during flights, or workers placing multiple floating windows in coffee shops without disturbing others. The puck doubles as a trackpad, and battery life reportedly stretches to four hours.

Project Aura is scheduled for a full launch in 2026, though exact pricing and retail details remain undisclosed. XREAL has confirmed features like head and hand tracking, Sony micro-OLED displays, and electronic lens dimming.

Gemini 3 Deep Think: When AI Actually Thinks

While glasses grab headlines, the Deep Think announcement might matter more to people who already lean on Google's AI tools for serious work. Rolling out now to Google AI Ultra subscribers ($250/month), Gemini 3 Deep Think represents Google's most advanced reasoning capability to date.

The key innovation is parallel reasoning: rather than pursuing a single chain of thought, the model explores multiple hypotheses simultaneously before synthesizing a final answer. Google describes it as its "most advanced reasoning feature," designed for complex math, science, and logic problems.
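Google hasn't published how Deep Think combines its parallel hypotheses, so the Python below is only a toy illustration of the general idea, built on a swapped-in technique: several independent reasoning paths run concurrently, and a simple majority vote (in the style of "self-consistency" sampling) picks the final answer. The solver `explore_hypothesis`, the doubling "problem," and the voting rule are all hypothetical stand-ins, not anything taken from Gemini.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def explore_hypothesis(problem: int, path_id: int) -> int:
    """Hypothetical stand-in for one reasoning path (not Gemini's method).

    The 'correct' answer is problem * 2; every fourth path (path_id % 4 == 3)
    makes a deliberate off-by-one mistake so the vote has something to filter.
    """
    answer = problem * 2
    if path_id % 4 == 3:
        answer += 1  # simulated faulty line of reasoning
    return answer


def parallel_reason(problem: int, n_paths: int = 8) -> int:
    """Explore n_paths hypotheses concurrently, then synthesize one answer."""
    with ThreadPoolExecutor(max_workers=n_paths) as pool:
        candidates = list(
            pool.map(lambda pid: explore_hypothesis(problem, pid), range(n_paths))
        )
    # Synthesis step (assumed for illustration): keep the most common answer.
    return Counter(candidates).most_common(1)[0][0]


if __name__ == "__main__":
    print(parallel_reason(21))  # prints 42: six of eight paths agree
```

A single path such as `explore_hypothesis(21, 3)` can be wrong on its own; pooling eight paths lets the majority outvote the faulty ones, which is the basic intuition behind spending extra compute on parallel exploration.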

The benchmark numbers back this up:

- 41.0% on Humanity's Last Exam (without tools)—a challenging test measuring AI's ability to answer thousands of crowdsourced questions across math, humanities, and science

- 45.1% on ARC-AGI-2 (with code execution), a test of novel problem-solving on which Google calls the result "unprecedented"

- 93.8% on GPQA Diamond—measuring PhD-level reasoning

For context, the standard Gemini 3 Pro model scores 37.5% on Humanity's Last Exam without tools. Deep Think pushes that 3.5 percentage points higher by spending more time "thinking through" problems.

Google also pointed out that an advanced Deep Think variant had already achieved gold-medal standard at the 2025 International Mathematical Olympiad, producing rigorous proofs directly from problem descriptions within the 4.5-hour competition time limit. That model took "hours to reason," unlike the consumer-facing version optimized for day-to-day usability.

The practical applications extend beyond academic benchmarks. Google says Deep Think excels at iterative development tasks—building complex features piece by piece, improving web development aesthetics and functionality, and tackling problems requiring strategic planning with step-by-step refinement.

Google Translate Gets Its Biggest Upgrade in Years

Perhaps the most immediately useful announcement: Google Translate now runs on Gemini, bringing dramatically improved handling of idioms, slang, and contextual expressions.

The old problem with machine translation was always literalism. Ask it to translate "stealing my thunder" and you'd get something about meteorological larceny. The Gemini-powered Translate now parses context to deliver translations that capture meaning, not just words.

This upgrade is rolling out in the US and India first, covering translations between English and nearly 20 languages including Spanish, Hindi, Chinese, Japanese, and German—available in the Translate app (Android and iOS) and on the web.

But the headline feature is live speech-to-speech translation via headphones. Connect any Bluetooth headphones to your Android device, open the Translate app, tap "Live translate," and you'll hear real-time translations that preserve the speaker's tone, emphasis, and cadence.

Google achieved this using the upgraded Gemini 2.5 Flash Native Audio model, which supports over 70 languages and 2,000 language pairs. The system handles multilingual input automatically—you don't need to specify which language is being spoken—and filters ambient noise for conversations in loud environments.

Two modes are available:

- Continuous listening: For lectures or group conversations where you want ongoing translation

- Quick translation: For brief exchanges like ordering food or asking directions

The beta is currently live on Android in the US, Mexico, and India. iOS support and additional regions are planned for 2026.

The Bigger Picture: Google's Ecosystem Advantage

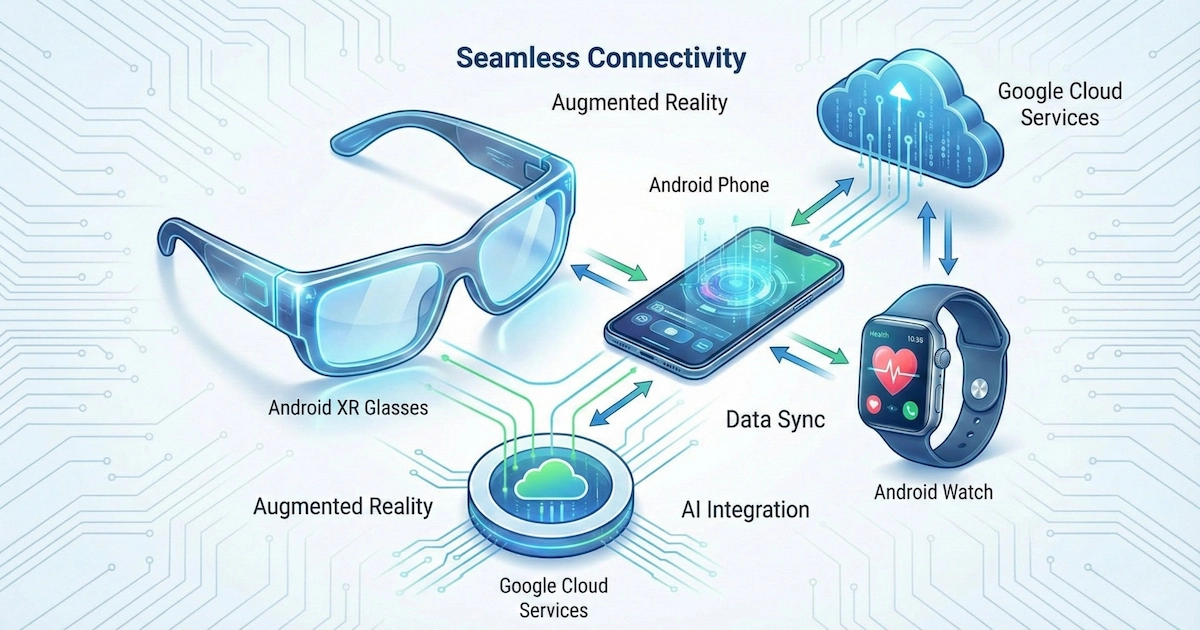

These announcements don't exist in isolation. Google is building an interconnected AI ecosystem where your glasses talk to your phone, which talks to your watch, which talks to Gemini—all sharing context seamlessly.

The Android XR glasses will pair with phones for heavy processing, sync photos with Wear OS watches for quick previews, and respond to voice commands through Gemini. The Translate upgrades make those glasses immediately more useful for travelers. Deep Think makes the Gemini you access through those glasses substantially smarter at complex reasoning.

Meta's Ray-Ban partnership proved consumers will wear AI glasses if they look good. Google is betting they'll prefer glasses that also plug into Android's broader device ecosystem.

What We Still Don't Know

Google left several critical details undisclosed:

- Pricing for consumer Android XR glasses from Warby Parker and Gentle Monster

- Battery life for untethered AI glasses (the display variants especially)

- Exact launch dates beyond "2026"

- Availability outside the US, including India and Europe, and regional pricing

- Prescription lens options—a significant factor for daily-wear glasses

The company also hasn't addressed privacy concerns around always-on cameras, though it mentioned gathering feedback on prototypes from "trusted testers" to build products that "respect privacy for you and those around you."

Should You Wait?

If you're currently happy with Meta Ray-Ban glasses, there's no urgency to abandon ship. Those glasses work, they look decent, and Meta continues improving their AI capabilities.

But if you're deep in the Android ecosystem—running a Samsung phone, Wear OS watch, and Google services—the 2026 Android XR glasses might offer integration benefits Meta can't match. The combination of fashion-forward designs from established eyewear brands, Gemini's improving intelligence, and native Android connectivity could be worth waiting for.

For the Translate upgrades and Deep Think, there's no waiting required, at least in supported markets. The translation improvements are rolling out now in the free Translate app, starting in the US and India. Deep Think requires a Google AI Ultra subscription at $250/month: steep for casual users, but potentially valuable for researchers, developers, and professionals tackling genuinely complex problems.

Google's message this week was clear: the company isn't ceding any territory in AI or wearables. Whether that ambition translates into products people actually want to buy remains the billion-dollar question.