The man who brought down Elizabeth Holmes and exposed the fraud at Theranos, once valued at $9 billion, just picked his next target. And this time, it's not a blood-testing startup. It's the entire AI industry.

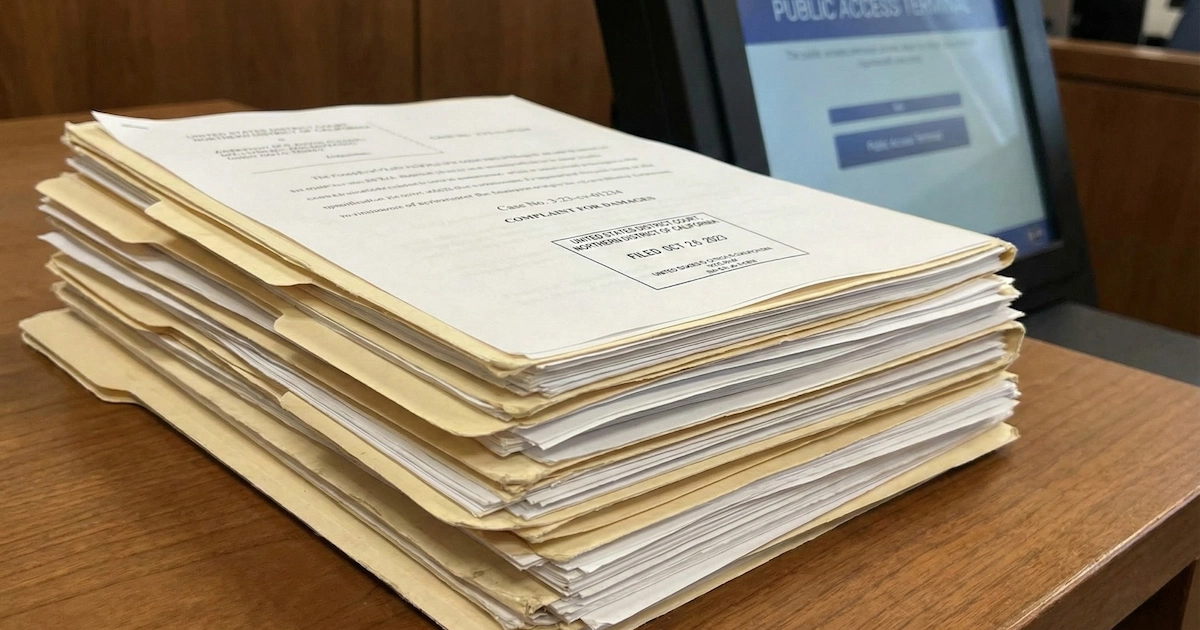

On December 22, 2025, John Carreyrou and five other authors filed lawsuits in a California federal court against six of the biggest names in artificial intelligence: OpenAI, Google, Anthropic, Meta, xAI (Elon Musk's company), and Perplexity. The allegation? These companies built their billion-dollar AI empires on stolen books.

Here's the thing nobody's talking about: this isn't just another tech lawsuit that'll disappear into settlement negotiations. It's the first copyright case to name xAI and Perplexity as defendants, and the plaintiffs are going for the jugular, demanding $150,000 per book from each company. Across all six defendants, that adds up to as much as $900,000 per infringed work.

The Piracy Problem at the Heart of AI

The lawsuit centers on something called "shadow libraries" — illegal online repositories like LibGen, Z-Library, OceanofPDF, and Pirate Library Mirror. These sites host millions of pirated books, and according to the complaint, every major AI company has been downloading from them.

Court documents from earlier cases revealed just how brazen this was. Meta employees reportedly torrented 81.7 terabytes of pirated books to train Llama. Internal messages showed one senior Meta researcher saying, "I don't think we should use pirated material. I really need to draw a line here." Another wrote that using pirated material should be "beyond our ethical threshold."

But it happened anyway. And according to the lawsuits, Meta wasn't alone.

The complaint alleges Anthropic downloaded over 7 million copyrighted books from LibGen and Pirate Library Mirror. OpenAI reportedly used these same sources for earlier versions of its models. Google, xAI, and Perplexity face similar accusations.

Why This Lawsuit Is Different

Sound familiar? It should. Anthropic just settled a nearly identical lawsuit in September 2025 for $1.5 billion — the largest copyright settlement in history. Authors received roughly $3,000 per book.

But here's where it gets interesting. Carreyrou and the other plaintiffs explicitly rejected that settlement. They opted out because they believe $3,000 is insultingly low.

The math tells the story. Under US copyright law, willful infringement can trigger statutory damages of up to $150,000 per work. The Anthropic settlement paid just 2% of that ceiling. These new lawsuits are demanding the full amount — from all six defendants.
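For readers who want to check the arithmetic, here is a rough back-of-the-envelope sketch. The constant and function names are purely illustrative, the $3,000 payout is approximate, and $150,000 is the statutory maximum the plaintiffs are asking for, not an amount any court has awarded.

```python
# Figures taken from the article; names are hypothetical, for illustration only.
STATUTORY_MAX_PER_WORK = 150_000  # USD, ceiling for willful infringement
SETTLEMENT_PER_WORK = 3_000       # USD, approximate Anthropic payout per book
NUM_DEFENDANTS = 6                # OpenAI, Google, Anthropic, Meta, xAI, Perplexity


def settlement_share(settlement: int, ceiling: int) -> float:
    """Fraction of the statutory ceiling that a per-work payout represents."""
    return settlement / ceiling


def max_claim_per_work(ceiling: int, defendants: int) -> int:
    """Upper bound per work if every defendant owed the full ceiling."""
    return ceiling * defendants


share = settlement_share(SETTLEMENT_PER_WORK, STATUTORY_MAX_PER_WORK)
total = max_claim_per_work(STATUTORY_MAX_PER_WORK, NUM_DEFENDANTS)

print(f"Settlement as share of ceiling: {share:.0%}")
print(f"Maximum claim per work across all defendants: ${total:,}")
```

The gap between those two numbers is the whole dispute: one is what authors actually received, the other is the most the law could allow if willful infringement were proven against every defendant.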

"LLM companies should not be able to so easily extinguish thousands upon thousands of high-value claims at bargain-basement rates," the complaint states.

The other plaintiffs joining Carreyrou include Lisa Barretta (11 books on spirituality), Philip Shishkin (nonfiction on Central Asia), Jane Adams (psychology), Matthew Sack (tech professional), and Michael Kochin (political science professor). Their combined works span multiple genres, but they share one thing in common: all allegedly ended up in AI training datasets without permission.

The Fair Use Twist Nobody Expected

Now, wait. Before you assume this is an open-and-shut case against AI companies, there's a critical legal nuance.

In June 2025, US District Judge William Alsup ruled in the earlier case against Anthropic that training AI on copyrighted books qualifies as "fair use," meaning the training itself was not infringement. His reasoning? The process is "exceedingly transformative." AI models don't reproduce books; they learn patterns from them.

But — and this is the crucial part — Judge Alsup also ruled that how companies obtained those books matters. Downloading millions of titles from pirate sites? That's still copyright infringement, regardless of what you do with them afterward.

This distinction is why Anthropic settled for $1.5 billion despite "winning" on the fair use question. And it's why these new lawsuits have teeth.

The plaintiffs are essentially arguing: You can't build a billion-dollar business on stolen goods and then claim the end product is transformative. The theft happened the moment you hit download.

What This Means for Indian Users

If you're reading this in India and wondering why you should care about a US lawsuit, here's your answer: India is OpenAI's second-largest market, behind only the United States.

ChatGPT Go launched here at ₹399/month. ChatGPT Plus costs ₹1,999/month. Google's competing AI Plus plan just dropped to ₹199/month (introductory) or ₹399/month (regular). These aren't charity prices — they're strategic moves to capture India's 800+ million internet users.

But if AI companies face billions in damages, that cost has to come from somewhere.

Scenario 1: Prices go up. If OpenAI, Google, or others need to set aside reserves for legal liabilities, subscription costs could increase. The ₹399 ChatGPT Go tier — specifically designed for price-sensitive markets like India — might not survive.

Scenario 2: Features get restricted. Companies might limit certain capabilities (especially those involving text generation from learned patterns) in markets where licensing costs or legal risks are highest.

Scenario 3: Content deals become mandatory. We're already seeing this. OpenAI has signed licensing deals with content owners like The Atlantic, Axel Springer, and most recently, Disney. If courts rule that training on copyrighted content requires payment, expect more deals, with those costs flowing to users.

India is already grappling with these questions domestically. In November 2024, Asian News International (ANI) sued OpenAI in the Delhi High Court for using its news content without permission. The Federation of Indian Publishers, NDTV, Hindustan Times, Indian Express, and even Bollywood music labels like T-Series have sought to join similar actions.

In December 2025, India's Department for Promotion of Industry and Internal Trade (DPIIT) released a working paper proposing a "hybrid model" — mandatory licensing fees for AI companies training on copyrighted content, with royalties flowing to creators through a central body. It's one of the most interventionist approaches any country has proposed.

The Bigger Picture: Who Really Wins?

Here's the uncomfortable truth about these lawsuits. Even if the authors win — even if courts award the full $150,000 per work — the AI industry isn't going away.

Anthropic paid $1.5 billion and closed a $13 billion funding round the same week, valuing the company at $183 billion. OpenAI is reportedly trying to raise $100 billion at an $830 billion valuation. These companies have war chests that can absorb even massive settlements.

The Danish Rights Alliance, which helped take down Z-Library, put it bluntly: this fits a tech industry playbook of "grow first, pay fines later." The settlement becomes a cost of doing business — expensive, but not existential.

But there's another way to read this. Every settlement, every court ruling, every licensing deal incrementally establishes that training data has value. That creators deserve compensation. That you can't build on pirated content without consequences.

The Anthropic settlement set a floor of $3,000 per work. These new lawsuits are arguing for a ceiling of $150,000. The final number will most likely land somewhere in between, and whatever it is could become the benchmark for every AI company going forward.

For Indian creators — authors, journalists, musicians, filmmakers — this matters enormously. If global precedent establishes that AI training requires licensing, India's proposed hybrid model suddenly looks less like regulatory overreach and more like the future.

What Happens Next

The lawsuit was filed on December 22, 2025. No preliminary hearing date has been set. But several things are likely:

Discovery will be brutal. The plaintiffs want internal communications showing what companies knew about piracy sources. We've already seen how damaging those emails can be in the Meta case. Expect more.

Settlements are probable. xAI or Perplexity, as smaller players with less legal firepower, might settle early to avoid discovery. The bigger companies — OpenAI, Google, Meta — will likely fight longer.

Appeals will happen. Whatever the outcome, this will be litigated for years. The fair use question alone could reach the Supreme Court.

Indian courts are watching. The ANI v. OpenAI case in the Delhi High Court is still ongoing. US rulings are not binding in India, but they could influence how Indian judges interpret "fair dealing" under Section 52 of the Copyright Act, 1957.

The irony isn't lost on anyone. John Carreyrou spent years exposing a company that promised revolutionary technology built on fraud. Now he's arguing that the AI revolution was built on a different kind of theft.

Whether courts agree will determine not just who pays whom, but what kind of AI industry we get. One where training data is licensed and compensated? Or one where the piracy continues until someone forces it to stop?

For the millions of Indians using ChatGPT, Gemini, and Grok every day, that's not an abstract question. It could show up in your subscription price.

![6 AI Giants Sued for Book Piracy: What Indian Users Need to Know [2025]](https://techdodo.in/storage/articles/ai-copyright-lawsuit-2025-india_1766844083_694fe6b3261f7_original.webp)